Gender bias in image labeling systems

Author: Jeff Lockhart

Date: 19 November 2020

[A version of this blogpost appeared earlier on the (opens in a new window)scatterplot]

Gender bias is pervasive in our society generally, and in the tech industry and AI research community specifically. So it is no surprise that image labeling systems—tools that use AI to generate text describing pictures—produce both blatantly sexist and more subtly gender biased results.

In a new (opens in a new window)paper, out now and open access in Socius, my co-authors and I add more examples to the growing literature on gender bias in AI. More importantly, we provide a framework for researchers seeking to either investigate AI bias or to use potentially biased AI systems in their own work.

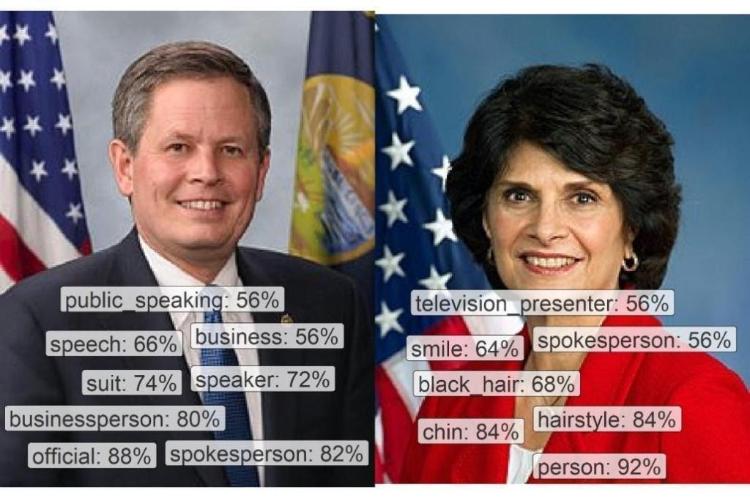

Two images of U.S. Members of Congress with their corresponding labels as assigned by Google Cloud Vision. On the left, Steve Daines, Republican Senator for Montana. On the right, Lucille Roybal-Allard, Democratic Representative for California’s 40th congressional district. Percentages next to labels denote confidence scores of Google Cloud Vision.

We set out to study how politicians use images on twitter, but quickly ran into questions of methodology that grew into a paper of their own. Automated labeling systems have a lot of promise for social scientific research. In just 18 months, US Congressmembers tweeted nearly 200,000 images. Analyzing a data set that size manually would require an enormous (and often prohibitive) amount of labor. Google, Amazon, Microsoft, and others offer AI tools that can produce labels / tags / codes for a data set that size in minutes, telling us what objects, occupations, activities, emotions, and more are in them. Automated labeling systems aren’t limited to images either: there are labeling systems that summarize text, tell us if a comment is spam, or estimate the sentiment of tweets. But as with text analysis and even traditional statistics, the advice to “always look at the data” holds for image labeling systems. So that’s what we did, and here are some key takeaways:

Skirting the catch-22 of measuring AI bias. Automated labeling systems are designed for use when we don’t have another source of ground truth data, yet evaluating those systems for bias requires having exactly that ground truth data. In other words, the systems are only useful when we can’t verify whether they’re good, which casts doubts on their usefulness. We avoided this problem by using two matched data sets: the images members of congress tweeted, and the professional portraits of the same members of congress. Our portrait data has ground truth demographic information (we know the age, race, gender, and occupation of members of congress). Importantly, it is also fairly uniform: the style, quality, and contents of congressional portraits are highly consistent. Yet unlike laboratory research that crops out clothing, jewelry, hair, etc., leaving the algorithm only racist, hetero- and cis-sexist (opens in a new window)physiognometric concepts like “(opens in a new window)bone structure” and “nose shape” to make inferences from, portrait data contain these socially relevant presentations of self that humans and the algorithm encounter in “real world” data like images posted to twitter. So if the algorithm treats men and women differently in our portrait data, we can be fairly confident it is evidence of bias that will manifest in other, less controlled data sets.

Obvious sexism and errors. Each labeling system has the same purpose, but they aren’t all equal. Microsoft Azure Computer Vision (MACV), for example, took portraits of congresswomen and labeled them things like laptop, umbrella, cake, kitchen, and plate. None of those objects were in any of the portraits; all are blatant errors. But labeling pictures of women with cake, kitchen, and plate when none of those items are present suggests that the algorithm has been trained to reproduce the sexist trope that the presence of women implies kitchens and food. All three commercial products we tested (MACV and its competitors from Google [GCV] and Amazon [AR]) labeled some of the congress women “girl.” AR also labeled women “kid” and “child.” None of the congressmen were labeled “boy,” but they did get things like “gentleman” in GCV. The youngest man and women in our data were both 34. Ashley Mears has (opens in a new window)written about how referring to adult women as “girls” fits into larger sexist systems.

Accuracy isn’t enough. The adage that “a picture is worth a thousand words” is exactly right. There are many, many words we could use to describe or label any given picture. Yet image labeling systems are generally designed to only return a small number of labels they have high confidence in. We find, along with many others, that if one of these systems says something (e.g. a (opens in a new window)cat) is in an image, that thing (the cat) is very likely there. But the converse is not true: if the system doesn’t return the label “cat,” it does not mean there is no cat in the image. It is possible, then, for a system to be both entirely correct in every label and also deeply biased at the same time. We use “conditional demographic parity” as our definition of bias. In short, if something is in an image, does the algorithm point it out equally often for different demographic groups? Nearly all of the people in our data have visible hair, and all are wearing professional clothing. Yet the algorithms systematically call attention to things like “hairstyle,” “apparel,” and even “cheek,” for women and not for their men colleagues. This point is critical for anyone hoping to use labeling algorithms. Right now, it is common in research uses to test the algorithms for accuracy – are the labels naming things that are really in the images – but outside of the ((opens in a new window)thriving) (opens in a new window)algorithmic (opens in a new window)bias (opens in a new window)literature, actual users of these algorithms rarely check for conditional demographic parity.

Bias can confound “unrelated” research questions. For example, the algorithms told us that Democrats tweet more pictures of children and girls than Republicans do. However, there were more women among congressional Democrats, and the algorithms labeled adult women as girls and children. Thus the finding about Democrats using more images of children in their social media might be an artifact of the algorithms’ bias: perhaps all members of congress tweet pictures of themselves at the same rate, but the algorithm labels the women as children more often than the men, and more of the Democrats are women. There is no way to know without an unbiased audit of the twitter images.

Old biases are hard to shake. To their credit, the GCV team have made all of their occupation labels gender-neutral: “spokesperson,” “television presenter,” “businessperson,” and so on. They also recently (opens in a new window)removed gender recognition from their system, so users can no longer use it to guess the gender of people in pictures. This is an (opens in a new window)important step! Yet Google’s tool does guess the occupation of people in pictures, and it guesses occupations in gender-biased ways. All of our portraits depict the same occupation: member of congress. Yet GCV labeled the men businessperson, spokesperson, white collar worker, military officer, and hotel manager way more often than women. The only occupation assigned to women more than men was television presenter. And yup: there’s a sexism story behind that. In the 1950s in the US, television stations started hiring non-expert “(opens in a new window)weather girls” as presenters to attract viewers through theatrics and sex appeal. The GCV team may have made their occupation names gender neutral, but they still built a system that encoded the gendered occupation trope: someone is a “spokesperson” if they are a man, with the knowledge and authority that implies. They are a “television presenter” if they are a woman, inexpert and valued for their looks.

There’s a lot more in the paper, of course. You can find the open access paper (opens in a new window)here, and the replication materials (opens in a new window)here.

About the author: (opens in a new window)Jeffrey W. Lockhart is a sociology PhD candidate at the University of Michigan, with previous graduate degrees in computer science and gender studies. His research interests include gender, sexuality, science, knowledge, and technology.