Generative AI refers to a subset of artificial intelligence that focuses on creating or generating new data, content, or information that is similar to what a human might produce. This type of AI system is designed to generate outputs that resemble human-created content in various domains, such as text, images, music, and more. Generative AI models are trained on large datasets and are capable of learning patterns and structures within that data. They use this learned knowledge to produce new content that is consistent with the patterns they have learned. Examples of generative AI include Bing, ChatGPT, and Midjourney. It does not include predictive text functions or assistive technologies like Grammarly. (Source: ChatGPT)

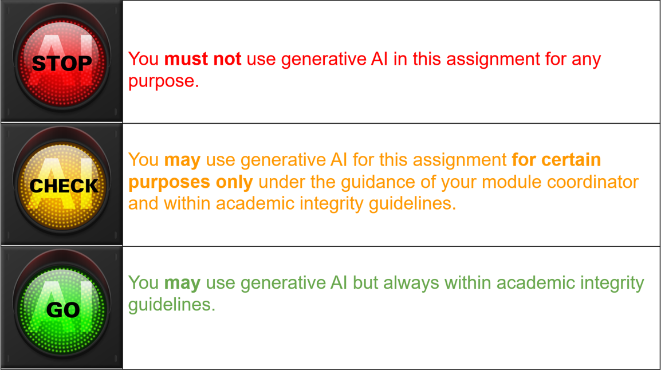

In spring 2024 UCD College of Arts and Humanities adopted a traffic light system to indicate whether or not students can use generative AI in their assignments. Under this system, individual assignments are marked red, amber or green, giving students clear guidance on whether they are permitted to use generative AI in their work. The system also aims to promote a culture of transparency around the use of generative AI.

There is currently no general UCD policy on the use of generative AI. However, you are bound by the Student Code of Conduct, which states that academic integrity is a fundamental principle underpinning all academic activity at UCD. See also UCD's Academic Integrity Policy.

Your school/s, subject/s and/or module/s may have specific guidelines on the use of generative AI in assessment, so please ask your module coordinator for advice. The UCD Student Plagiarism Policy defines plagiarism as ‘the inclusion, in any form of assessment, of material without due acknowledgement of its original source’. This means that if you are permitted to use generative AI in your assessment, you must explicitly acknowledge its use.

As a language prediction tool, generative AI has no understanding of the content it produces, which may include errors, inaccuracies, and omissions. For this reason, relying on generative AI as a source is poor academic practice. However, if you do use it as a source, you must cite it.

Generative AI may produce convincing-looking prose in response to a question or prompt. However, there is a high risk of inconsistencies, inaccuracies, errors, and omissions in the content produced, because generative AI models patterns in language but not meaning. As a result, it may produce incorrect information, invented quotes, erroneous attributions, and non-existent authors. A novice may find it difficult to identify errors that would be obvious to a subject expert.

Generative AI was not developed for educational purposes, and its potential impact on teaching, learning, and assessment is only beginning to be evaluated. Potential risks include:

- Plagiarism or academic misconduct through the use of generative AI for content generation.

- Reproducing biases, inaccuracies or omissions through the uncritical use of generative AI.

- Superficial insights because generative AI cannot understand the depth and context of academic topics.

- A decline in original thinking, creativity, and independent research skills through over-reliance on generative AI.

Please be aware that specific school/s, subject/s, module/s, and assignment/s may not permit the use of generative AI and/or may have clear guidelines on what is or is not permissible. Under the traffic light system adopted by UCD College of Arts and Humanities in spring 2024, individual assignments are marked red, amber or green to show whether you are permitted to use generative AI in your work.

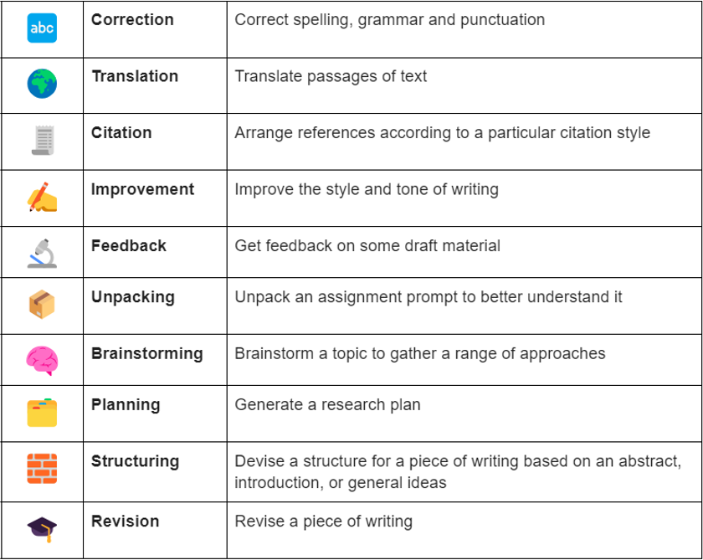

If yours is an amber or green assignment that permits (some) use of generative AI, you may choose to use it in a range of ways. With an amber assignment your module coordinator will set the limits on potential uses; with green you will set the limits yourself. Some examples of potential educational uses of generative AI are shared below. These will not be suitable or beneficial for all types of assignments, so be guided by your module coordinator if you are unsure.

If you are permitted to use generative AI and choose to use it in any of these ways, you must do so critically, ethically and responsibly, and you must acknowledge its use. As a general rule, you should not use generative AI to produce new content for your assignments, as this is poor academic practice and, if unacknowledged, amounts to plagiarism.

You may not be allowed to use generative AI in your assignments; please check with your module coordinator. If you are permitted to use it in a particular assignment, your module coordinator will give you guidance on how to acknowledge its use in one of the following ways:

- Adapting normal citation practices, with generative AI cited in parentheses/footnotes/endnotes and then listed in the bibliography or works cited, according to the specific style guide (e.g. Harvard, MLA).

- Supplying copies of your prompts and/or conversations with generative AI as an appendix to the assignment.

- Showing track changes or providing access to your document history to show your writing, revision, and editing process.

- Writing a critical reflection on how you used generative AI in the preparation of your assignment and what you learned from the process.

There are several reasons to be concerned about the emergence of generative AI.

- Lack of regulation

- Commercialization

- Privacy and intellectual property concerns

- Biases

- Human labour costs

- Environmental impact

- Plagiarism risk

- AI colonialism

Yes, it is OK to ask your lecturers and tutors about generative AI. But please be aware that this is a new and rapidly evolving technology and they are learning at the same time as you! You should also know that Arts and Humanities faculty and staff hold many different opinions about whether generative AI has any place in higher education. There may also be good academic reasons why you are allowed to use generative AI in one assignment but not in another.

If you are unsure whether you can use generative AI in an assignment, ask your module coordinator. Many modules in the College have adopted a ‘traffic light’ system to indicate whether or not you can use generative AI in your academic work.

AI-generated text can be detected in a number of ways, including through specialist software and the knowledge and expertise of staff in the area.

No, you do not have to acknowledge the use of assistive technologies provided through UCD Disability Services. Module coordinators are already aware of your use of these tools through your list of accommodations.

You can access Google Gemini (formerly Bard) through your UCD student Google Workspace account. Gemini offers similar functionality to OpenAI’s ChatGPT and lets you experiment with generative AI without setting up a third-party account. You must set up an account to access ChatGPT, which is currently available in a free version (GPT-3.5) and a paid-for version (GPT-4). Generative AI is also becoming increasingly integrated with familiar tools; for example, a new AI tool, ‘Help Me Write’, is being introduced to Google Docs.

Note on Use of Generative AI

ChatGPT (GPT-3.5) was used to generate the definition for question 1, ‘What is generative AI?’, because it is a generic question and we were able to verify the answer. Generative AI was not used to create any of the other FAQs, because we were using the questions and answers to think through the complexities of generative AI in teaching, learning and assessment in our College and University. Using generative AI would have obstructed this important, critically reflective process.